Back-Propagation Equations derivation for Multi-Layer Perceptron

2025-06-22

1 Multi-Layer Perceptron Model

In this work, the back-propagation equations for a multi-layer perceptron (MLP) were derived in a general form that can be extended to deep fully-connected networks.

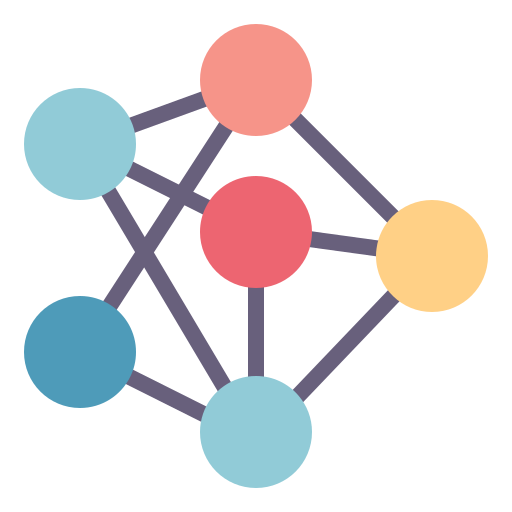

For the example derivation, an MLP with the architecture shown above was considered.

| Symbol | Description | Value |
|---|---|---|
| \(n\) | Input size | \(2\) |
| \(N^{(1)}\) | Hidden neurons | \(3\) |
| \(N^{(2)}\) | Output size | \(2\) |

2 MLP equations

2.1 Layer 1 equations

\[ \begin{align*} z_1^{(1)} = w_{11}^{(1)} x_1 + w_{12}^{(1)} x_2 + b_1^{(1)} \\ z_2^{(1)} = w_{21}^{(1)} x_1 + w_{22}^{(1)} x_2 + b_2^{(1)} \\ z_3^{(1)} = w_{31}^{(1)} x_1 + w_{32}^{(1)} x_2 + b_3^{(1)} \\ \end{align*} \] \[ \textbf{z}^{(1)} = \textbf{W}^{(1)} \textbf{x} + \textbf{b}^{(1)} \]

In matrix form, the pre-activation vector will be \[ \begin{align*} \textbf{z}^{(1)} = \begin{bmatrix} z_{1}^{(1)} \\ z_{2}^{(1)} \\ z_{3}^{(1)} \end{bmatrix}_{N^{(1)}\times 1} = \begin{bmatrix} w_{11}^{(1)} & w_{12}^{(1)} \\ w_{21}^{(1)} & w_{22}^{(1)} \\ w_{31}^{(1)} & w_{32}^{(1)} \\ \end{bmatrix}_{N^{(1)}\times n} \begin{bmatrix} x_{1} \\ x_{2} \\ \end{bmatrix}_{n \times 1} + \begin{bmatrix} b_{1}^{(1)} \\ b_{2}^{(1)} \\ b_{3}^{(1)} \\ \end{bmatrix}_{N^{(1)}\times 1} \end{align*} \]

With an element-wise activation function \(h(\cdot)\),

\[ \begin{align*} \textbf{a}^{(1)} = \begin{bmatrix} a_{1}^{(1)} \\ a_{2}^{(1)} \\ a_{3}^{(1)} \end{bmatrix}_{N^{(1)}\times 1} = h\left( \begin{bmatrix} z_{1}^{(1)} \\ z_{2}^{(1)} \\ z_{3}^{(1)} \\ \end{bmatrix}\right)_{N^{(1)}\times 1} \end{align*} \]
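As a quick sanity check, the layer-1 matrix equation can be evaluated with NumPy and compared against the component-wise equation for \(z_1^{(1)}\). This is a minimal sketch: the random weights, the seed and the choice \(h = \tanh\) are assumptions made only for illustration.

```python
import numpy as np

# Sizes from the example architecture: n = 2 inputs, N1 = 3 hidden neurons.
n, N1 = 2, 3

rng = np.random.default_rng(0)
W1 = rng.normal(size=(N1, n))   # W^(1), shape N1 x n (random, illustrative)
b1 = rng.normal(size=(N1, 1))   # b^(1), shape N1 x 1
x = rng.normal(size=(n, 1))     # input x, shape n x 1

# Assumption: h(.) = tanh, applied element-wise.
h = np.tanh

z1 = W1 @ x + b1                # z^(1) = W^(1) x + b^(1), shape N1 x 1
a1 = h(z1)                      # a^(1) = h(z^(1)), shape N1 x 1

# The matrix product agrees with the scalar equation for z_1^(1).
z1_first = W1[0, 0] * x[0, 0] + W1[0, 1] * x[1, 0] + b1[0, 0]
print(z1.shape, a1.shape)       # (3, 1) (3, 1)
```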

2.2 Layer 2 equations

\[ \begin{align*} z_1^{(2)} = w_{11}^{(2)} a_1^{(1)} + w_{12}^{(2)} a_2^{(1)} + w_{13}^{(2)} a_3^{(1)} + b_1^{(2)} \\ z_2^{(2)} = w_{21}^{(2)} a_1^{(1)} + w_{22}^{(2)} a_2^{(1)} + w_{23}^{(2)} a_3^{(1)} + b_2^{(2)} \\ \end{align*} \] \[ \textbf{z}^{(2)} = \textbf{W}^{(2)} \textbf{a}^{(1)} + \textbf{b}^{(2)} \]

In matrix form, the pre-activation vector will be \[ \begin{align*} \textbf{z}^{(2)} = \begin{bmatrix} z_{1}^{(2)} \\ z_{2}^{(2)} \\ \end{bmatrix}_{N^{(2)}\times 1} = \begin{bmatrix} w_{11}^{(2)} & w_{12}^{(2)} & w_{13}^{(2)} \\ w_{21}^{(2)} & w_{22}^{(2)} & w_{23}^{(2)} \\ \end{bmatrix}_{N^{(2)}\times N^{(1)}} \begin{bmatrix} a_{1}^{(1)} \\ a_{2}^{(1)} \\ a_{3}^{(1)} \\ \end{bmatrix}_{N^{(1)} \times 1} + \begin{bmatrix} b_{1}^{(2)} \\ b_{2}^{(2)} \\ \end{bmatrix}_{N^{(2)}\times 1} \end{align*} \]

With an element-wise output activation function \(o(\cdot)\), \[ \begin{align*} \hat{\textbf{y}} = \begin{bmatrix} \hat{y}_{1} \\ \hat{y}_{2} \\ \end{bmatrix}_{N^{(2)}\times 1} = o\left( \begin{bmatrix} z_{1}^{(2)} \\ z_{2}^{(2)} \\ \end{bmatrix}\right)_{N^{(2)}\times 1} \end{align*} \]
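The two layers together form the forward pass. The sketch below assumes NumPy, \(h = \tanh\) and a sigmoid for \(o\) (the derivation itself does not fix either activation), and returns every intermediate quantity that the back-propagation equations reuse. The function name `forward` and its signature are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, b1, W2, b2, h=np.tanh, o=sigmoid):
    """Forward pass of the 2-layer MLP; returns all intermediates for back-propagation."""
    z1 = W1 @ x + b1      # N1 x 1
    a1 = h(z1)            # N1 x 1
    z2 = W2 @ a1 + b2     # N2 x 1, uses the layer-2 bias b^(2)
    y_hat = o(z2)         # N2 x 1
    return z1, a1, z2, y_hat

n, N1, N2 = 2, 3, 2
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(N1, n)), rng.normal(size=(N1, 1))
W2, b2 = rng.normal(size=(N2, N1)), rng.normal(size=(N2, 1))
x = rng.normal(size=(n, 1))

z1, a1, z2, y_hat = forward(x, W1, b1, W2, b2)
print(y_hat.shape)  # (2, 1)
```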

3 Back propagation equations derivation

3.1 Loss equation

Taking the squared-error loss (the per-sample form of the mean-squared error, scaled by \(\frac{1}{2}\)) as the loss function, and keeping the loss in vector form,

\[ \vec{L} = \begin{bmatrix} L_1 \\ L_2 \end{bmatrix} = \begin{bmatrix} \frac{1}{2}\left(\hat{y}_1 - y_1\right)^2 \\ \frac{1}{2}\left(\hat{y}_2 - y_2\right)^2 \\ \end{bmatrix}_{N^{(2)}\times 1} \]

Advantages of keeping the loss in vector form:

- Dimensional consistency is maintained throughout back-propagation.
- The loss of each individual output component can be analyzed during training.

However, the scalar sum of all components, i.e. \(L = \sum_{i=1}^2\frac{1}{2}\left(\hat{y}_i-y_i\right)^2\), is used to check the convergence of model training.
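The vector loss and its scalar sum can be computed side by side. The output and target values below are made up purely for illustration.

```python
import numpy as np

y_hat = np.array([[0.8], [0.3]])   # hypothetical network output, N2 x 1
y = np.array([[1.0], [0.0]])       # hypothetical target, N2 x 1

L_vec = 0.5 * (y_hat - y) ** 2     # loss vector: one entry L_k per output component
L = L_vec.sum()                    # scalar loss used to monitor convergence
print(L_vec.ravel(), L)
```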

3.2 Loss derivative w.r.t. \(\hat{\textbf{y}}\) and \(\textbf{z}^{(2)}\)

Both \(\vec{L}\) and \(\hat{\textbf{y}}\) are vectors, so the resulting derivative will be a matrix (Jacobian matrix).

\[ \begin{align*} \frac{\partial \vec{L}}{\partial \hat{\textbf{y}}} &= \begin{bmatrix} \frac{\partial L_1}{\partial \hat{y}_1} & \frac{\partial L_1}{\partial \hat{y}_2} \\ \frac{\partial L_2}{\partial \hat{y}_1} & \frac{\partial L_2}{\partial \hat{y}_2} \\ \end{bmatrix} = \begin{bmatrix} \left(\hat{y}_1 - y_1\right) & 0 \\ 0 & \left(\hat{y}_2 - y_2\right) \\ \end{bmatrix}_{N^{(2)} \times N^{(2)}} \end{align*} \]

Similarly, \(\vec{L}\) and \(\textbf{z}^{(2)}\) are vectors, so the resulting derivative will also be a matrix as derived below.

\[ \begin{align*} \frac{\partial \vec{L}}{\partial \textbf{z}^{(2)}} = \frac{\partial \vec{L}}{\partial \hat{\textbf{y}}} \frac{\partial \hat{\textbf{y}}}{\partial \textbf{z}^{(2)}} = \frac{\partial \vec{L}}{\partial \hat{\textbf{y}}} \begin{bmatrix} \frac{\partial \hat{y}_1}{\partial z_1^{(2)}} & \frac{\partial \hat{y}_1}{\partial z_2^{(2)}} \\ \frac{\partial \hat{y}_2}{\partial z_1^{(2)}} & \frac{\partial \hat{y}_2}{\partial z_2^{(2)}} \\ \end{bmatrix}_{N^{(2)} \times N^{(2)}} = \frac{\partial \vec{L}}{\partial \hat{\textbf{y}}} \begin{bmatrix} o'(z_1^{(2)}) & 0 \\ 0 & o'(z_2^{(2)}) \\ \end{bmatrix}_{N^{(2)} \times N^{(2)}} = \delta_{N^{(2)} \times N^{(2)}}^{(2)} \end{align*} \]
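The Jacobians of Section 3.2 can be checked numerically. This sketch assumes \(o\) is the sigmoid, so that \(o'(z) = o(z)(1 - o(z))\), and compares \(\delta^{(2)}\) with a central finite-difference Jacobian of \(\vec{L}\) with respect to \(\textbf{z}^{(2)}\). The names `loss_vec` and `delta2` and the random \(\textbf{z}^{(2)}\) are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(1)
z2 = rng.normal(size=(2, 1))       # hypothetical z^(2), N2 x 1
y = np.array([[1.0], [0.0]])       # hypothetical target

def loss_vec(z):
    """Vector loss as a function of z^(2), assuming o(.) = sigmoid."""
    return 0.5 * (sigmoid(z) - y) ** 2

y_hat = sigmoid(z2)
dL_dyhat = np.diag((y_hat - y).ravel())          # diag(y_hat - y), N2 x N2
o_prime = y_hat * (1.0 - y_hat)                  # o'(z^(2)) for the sigmoid
delta2 = dL_dyhat @ np.diag(o_prime.ravel())     # delta^(2), N2 x N2

# Central finite differences: column j holds dL/dz_j^(2)
eps = 1e-6
J = np.zeros((2, 2))
for j in range(2):
    dz = np.zeros_like(z2)
    dz[j, 0] = eps
    J[:, j] = ((loss_vec(z2 + dz) - loss_vec(z2 - dz)) / (2 * eps)).ravel()

print(np.allclose(delta2, J, atol=1e-8))  # True
```

Both Jacobians are diagonal because each \(L_k\) depends only on \(\hat{y}_k\), and each \(\hat{y}_k\) only on \(z_k^{(2)}\).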

3.3 Loss derivative w.r.t. \(\textbf{b}^{(2)}\)

Here also, \(\vec{L}\) and \(\textbf{b}^{(2)}\) are vectors, resulting in a matrix as shown below.

\[ \frac{\partial \vec{L}}{\partial \textbf{b}^{(2)}} = \frac{\partial \vec{L}}{\partial \textbf{z}^{(2)}} \frac{\partial \textbf{z}^{(2)}}{\partial \textbf{b}^{(2)}} = \delta^{(2)} \begin{bmatrix} \frac{\partial z_1^{(2)}}{\partial b_1^{(2)}} & \frac{\partial z_1^{(2)}}{\partial b_2^{(2)}} \\ \frac{\partial z_2^{(2)}}{\partial b_1^{(2)}} & \frac{\partial z_2^{(2)}}{\partial b_2^{(2)}} \\ \end{bmatrix} = \delta^{(2)} \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ \end{bmatrix} = \delta^{(2)}_{N^{(2)}\times N^{(2)}} \]

3.4 Loss derivative w.r.t. \(\textbf{W}^{(2)}\)

Here, \(\vec{L}\) and \(\textbf{W}^{(2)}\) are a vector and a matrix, respectively. Hence, the resulting derivative is a third-order tensor, as shown below.

\[ \begin{align*} \frac{\partial \vec{L}}{\partial \textbf{W}^{(2)}} &= \frac{\partial \vec{L}}{\partial \textbf{z}^{(2)}} \frac{\partial \textbf{z}^{(2)}}{\partial \textbf{W}^{(2)}} = \delta^{(2)} \left[ \begin{bmatrix} \frac{\partial z_1^{(2)}}{\partial w_{11}^{(2)}} & \frac{\partial z_1^{(2)}}{\partial w_{12}^{(2)}} & \frac{\partial z_1^{(2)}}{\partial w_{13}^{(2)}} \\ \frac{\partial z_1^{(2)}}{\partial w_{21}^{(2)}} & \frac{\partial z_1^{(2)}}{\partial w_{22}^{(2)}} & \frac{\partial z_1^{(2)}}{\partial w_{23}^{(2)}} \\ \end{bmatrix} \begin{bmatrix} \frac{\partial z_2^{(2)}}{\partial w_{11}^{(2)}} & \frac{\partial z_2^{(2)}}{\partial w_{12}^{(2)}} & \frac{\partial z_2^{(2)}}{\partial w_{13}^{(2)}} \\ \frac{\partial z_2^{(2)}}{\partial w_{21}^{(2)}} & \frac{\partial z_2^{(2)}}{\partial w_{22}^{(2)}} & \frac{\partial z_2^{(2)}}{\partial w_{23}^{(2)}} \\ \end{bmatrix} \right]_{N^{(2)}\times N^{(2)} \times N^{(1)}} \\ &= \delta^{(2)}_{N^{(2)}\times N^{(2)}} \left[ \begin{bmatrix} a_1^{(1)} & a_2^{(1)} & a_3^{(1)} \\ 0 & 0 & 0 \\ \end{bmatrix} \begin{bmatrix} 0 & 0 & 0 \\ a_1^{(1)} & a_2^{(1)} & a_3^{(1)} \\ \end{bmatrix} \right]_{N^{(2)}\times N^{(2)} \times N^{(1)}} \\ \end{align*} \]

The resulting derivative \(\frac{\partial \vec{L}}{\partial \textbf{W}^{(2)}}\) will be a tensor of size \(N^{(2)}\times N^{(2)}\times N^{(1)}\).
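Sections 3.3 and 3.4 can be verified the same way. In this sketch (NumPy, sigmoid output activation assumed, hidden activations drawn at random), the tensor \(\partial \textbf{z}^{(2)}/\partial \textbf{W}^{(2)}\) is built slice by slice, contracted with \(\delta^{(2)}\) over its first index using `np.tensordot`, and compared with finite differences of every \(L_k\) with respect to every \(w_{ij}^{(2)}\) and \(b_i^{(2)}\).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

N1, N2 = 3, 2
rng = np.random.default_rng(2)
a1 = rng.normal(size=(N1, 1))        # stand-in for the hidden activations a^(1)
W2 = rng.normal(size=(N2, N1))
b2 = rng.normal(size=(N2, 1))
y = np.array([[1.0], [0.0]])         # hypothetical target

def loss_vec(W, b):
    """Vector loss as a function of W^(2) and b^(2), assuming o(.) = sigmoid."""
    return 0.5 * (sigmoid(W @ a1 + b) - y) ** 2

y_hat = sigmoid(W2 @ a1 + b2)
delta2 = np.diag((y_hat - y).ravel()) @ np.diag((y_hat * (1 - y_hat)).ravel())

# Section 3.3: dz^(2)/db^(2) is the identity, so dL/db^(2) = delta^(2)
dL_db2 = delta2 @ np.eye(N2)

# Section 3.4: slice k of dz^(2)/dW^(2) has (a^(1))^T in row k and zeros elsewhere
dz_dW = np.zeros((N2, N2, N1))
for k in range(N2):
    dz_dW[k, k, :] = a1.ravel()
# Chain rule: contract delta^(2) with the first index of dz_dW -> N2 x N2 x N1
dL_dW2 = np.tensordot(delta2, dz_dW, axes=(1, 0))

# Central finite differences for every entry dL_k/dw_ij and dL_k/db_i
eps = 1e-6
fd_W = np.zeros_like(dL_dW2)
for i in range(N2):
    for j in range(N1):
        dW = np.zeros_like(W2)
        dW[i, j] = eps
        fd_W[:, i, j] = ((loss_vec(W2 + dW, b2) - loss_vec(W2 - dW, b2)) / (2 * eps)).ravel()
fd_b = np.zeros_like(dL_db2)
for i in range(N2):
    db = np.zeros_like(b2)
    db[i, 0] = eps
    fd_b[:, i] = ((loss_vec(W2, b2 + db) - loss_vec(W2, b2 - db)) / (2 * eps)).ravel()

print(dL_dW2.shape, np.allclose(dL_dW2, fd_W, atol=1e-8), np.allclose(dL_db2, fd_b, atol=1e-8))
```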

3.5 Loss derivative w.r.t. \(\textbf{a}^{(1)}\)

Here, \(\vec{L}\) and \(\textbf{a}^{(1)}\) are vectors. Hence, the resulting derivative is a matrix.

\[ \begin{align*} \frac{\partial \vec{L}}{\partial \textbf{a}^{(1)}} &= \frac{\partial \vec{L}}{\partial \textbf{z}^{(2)}} \frac{\partial \textbf{z}^{(2)}}{\partial \textbf{a}^{(1)}} = \delta^{(2)} \begin{bmatrix} \frac{\partial z_1^{(2)}}{\partial a_{1}^{(1)}} & \frac{\partial z_1^{(2)}}{\partial a_{2}^{(1)}} & \frac{\partial z_1^{(2)}}{\partial a_{3}^{(1)}} \\ \frac{\partial z_2^{(2)}}{\partial a_{1}^{(1)}} & \frac{\partial z_2^{(2)}}{\partial a_{2}^{(1)}} & \frac{\partial z_2^{(2)}}{\partial a_{3}^{(1)}} \\ \end{bmatrix} = \delta^{(2)} \begin{bmatrix} w_{11}^{(2)} & w_{12}^{(2)} & w_{13}^{(2)} \\ w_{21}^{(2)} & w_{22}^{(2)} & w_{23}^{(2)} \\ \end{bmatrix} \\ &= \delta^{(2)}_{N^{(2)}\times N^{(2)}} \textbf{W}^{(2)}_{N^{(2)}\times N^{(1)}} \end{align*} \]

The gradient \(\frac{\partial \vec{L}}{\partial \textbf{a}^{(1)}}\) is a matrix of size \({N^{(2)}\times N^{(1)}}\).
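A similar numerical check applies to \(\partial \vec{L}/\partial \textbf{a}^{(1)} = \delta^{(2)} \textbf{W}^{(2)}\). This sketch again assumes NumPy, a sigmoid output activation and random values for \(\textbf{a}^{(1)}\), \(\textbf{W}^{(2)}\) and \(\textbf{b}^{(2)}\).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

N1, N2 = 3, 2
rng = np.random.default_rng(3)
a1 = rng.normal(size=(N1, 1))
W2 = rng.normal(size=(N2, N1))
b2 = rng.normal(size=(N2, 1))
y = np.array([[1.0], [0.0]])       # hypothetical target

def loss_vec(a):
    """Vector loss as a function of a^(1), assuming o(.) = sigmoid."""
    return 0.5 * (sigmoid(W2 @ a + b2) - y) ** 2

y_hat = sigmoid(W2 @ a1 + b2)
delta2 = np.diag((y_hat - y).ravel()) @ np.diag((y_hat * (1 - y_hat)).ravel())
dL_da1 = delta2 @ W2               # N2 x N1

# Central finite differences: column j holds dL/da_j^(1)
eps = 1e-6
fd = np.zeros((N2, N1))
for j in range(N1):
    da = np.zeros_like(a1)
    da[j, 0] = eps
    fd[:, j] = ((loss_vec(a1 + da) - loss_vec(a1 - da)) / (2 * eps)).ravel()

print(dL_da1.shape, np.allclose(dL_da1, fd, atol=1e-8))
```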

3.6 Loss derivative w.r.t. \(\textbf{z}^{(1)}\)

Here also, \(\vec{L}\) and \(\textbf{z}^{(1)}\) are vectors, so the resulting derivative is a matrix, as shown below.

\[ \begin{align*} \frac{\partial \vec{L}}{\partial \textbf{z}^{(1)}} &= \frac{\partial \vec{L}}{\partial \textbf{a}^{(1)}} \frac{\partial \textbf{a}^{(1)}}{\partial \textbf{z}^{(1)}} = \frac{\partial \vec{L}}{\partial \textbf{a}^{(1)}}_{N^{(2)} \times N^{(1)}} \begin{bmatrix} \frac{\partial a_1^{(1)}}{\partial z_1^{(1)}} & \frac{\partial a_1^{(1)}}{\partial z_2^{(1)}} & \frac{\partial a_1^{(1)}}{\partial z_3^{(1)}} \\ \frac{\partial a_2^{(1)}}{\partial z_1^{(1)}} & \frac{\partial a_2^{(1)}}{\partial z_2^{(1)}} & \frac{\partial a_2^{(1)}}{\partial z_3^{(1)}} \\ \frac{\partial a_3^{(1)}}{\partial z_1^{(1)}} & \frac{\partial a_3^{(1)}}{\partial z_2^{(1)}} & \frac{\partial a_3^{(1)}}{\partial z_3^{(1)}} \\ \end{bmatrix}_{N^{(1)} \times N^{(1)}} \\ & = \frac{\partial \vec{L}}{\partial \textbf{a}^{(1)}}_{N^{(2)} \times N^{(1)}} \begin{bmatrix} h'(z_1^{(1)}) & 0 & 0 \\ 0 & h'(z_2^{(1)}) & 0 \\ 0 & 0 & h'(z_3^{(1)}) \\ \end{bmatrix}_{N^{(1)} \times N^{(1)}} \\ &= \delta^{(1)}_{N^{(2)}\times N^{(1)}} \end{align*} \]
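Continuing the chain into layer 1, the sketch below assumes \(h = \tanh\) (so \(h'(z) = 1 - \tanh^2 z\)) and a sigmoid \(o\), forms \(\delta^{(1)}\) exactly as in the equation above, and checks it against finite differences with respect to \(\textbf{z}^{(1)}\). All values are random and illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

N1, N2 = 3, 2
rng = np.random.default_rng(4)
z1 = rng.normal(size=(N1, 1))
W2 = rng.normal(size=(N2, N1))
b2 = rng.normal(size=(N2, 1))
y = np.array([[1.0], [0.0]])       # hypothetical target

def loss_vec(z):
    """Vector loss as a function of z^(1), assuming h = tanh and o = sigmoid."""
    return 0.5 * (sigmoid(W2 @ np.tanh(z) + b2) - y) ** 2

a1 = np.tanh(z1)
y_hat = sigmoid(W2 @ a1 + b2)
delta2 = np.diag((y_hat - y).ravel()) @ np.diag((y_hat * (1 - y_hat)).ravel())
dL_da1 = delta2 @ W2                          # N2 x N1
h_prime = 1.0 - np.tanh(z1) ** 2              # derivative of tanh
delta1 = dL_da1 @ np.diag(h_prime.ravel())    # delta^(1), N2 x N1

# Central finite differences: column j holds dL/dz_j^(1)
eps = 1e-6
fd = np.zeros((N2, N1))
for j in range(N1):
    dz = np.zeros_like(z1)
    dz[j, 0] = eps
    fd[:, j] = ((loss_vec(z1 + dz) - loss_vec(z1 - dz)) / (2 * eps)).ravel()

print(delta1.shape, np.allclose(delta1, fd, atol=1e-8))
```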

3.7 Loss derivative w.r.t. \(\textbf{b}^{(1)}\)

Here also, \(\vec{L}\) and \(\textbf{b}^{(1)}\) are vectors, so the resulting derivative is a matrix, as shown below.

\[ \begin{align*} \frac{\partial \vec{L}}{\partial \textbf{b}^{(1)}} &= \frac{\partial \vec{L}}{\partial \textbf{z}^{(1)}} \frac{\partial \textbf{z}^{(1)}}{\partial \textbf{b}^{(1)}} = \delta^{(1)}_{N^{(2)} \times N^{(1)}} \begin{bmatrix} \frac{\partial z_1^{(1)}}{\partial b_1^{(1)}} & \frac{\partial z_1^{(1)}}{\partial b_2^{(1)}} & \frac{\partial z_1^{(1)}}{\partial b_3^{(1)}} \\ \frac{\partial z_2^{(1)}}{\partial b_1^{(1)}} & \frac{\partial z_2^{(1)}}{\partial b_2^{(1)}} & \frac{\partial z_2^{(1)}}{\partial b_3^{(1)}} \\ \frac{\partial z_3^{(1)}}{\partial b_1^{(1)}} & \frac{\partial z_3^{(1)}}{\partial b_2^{(1)}} & \frac{\partial z_3^{(1)}}{\partial b_3^{(1)}} \\ \end{bmatrix}_{N^{(1)} \times N^{(1)}} \\ & = \delta^{(1)}_{N^{(2)} \times N^{(1)}} \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \\ \end{bmatrix}_{N^{(1)} \times N^{(1)}} \\ &= \delta^{(1)}_{N^{(2)}\times N^{(1)}} \end{align*} \]

3.8 Loss derivative w.r.t. \(\textbf{W}^{(1)}\)

Here, \(\vec{L}\) and \(\textbf{W}^{(1)}\) are a vector and a matrix, respectively, so the resulting derivative is a third-order tensor, as shown below.

\[ \begin{align*} \frac{\partial \vec{L}}{\partial \textbf{W}^{(1)}} = \frac{\partial \vec{L}}{\partial \textbf{z}^{(1)}} \frac{\partial \textbf{z}^{(1)}}{\partial \textbf{W}^{(1)}} &= \delta^{(1)} \left[ \begin{bmatrix} \frac{\partial z_1^{(1)}}{\partial w_{11}^{(1)}} & \frac{\partial z_1^{(1)}}{\partial w_{12}^{(1)}} \\ \frac{\partial z_1^{(1)}}{\partial w_{21}^{(1)}} & \frac{\partial z_1^{(1)}}{\partial w_{22}^{(1)}} \\ \frac{\partial z_1^{(1)}}{\partial w_{31}^{(1)}} & \frac{\partial z_1^{(1)}}{\partial w_{32}^{(1)}} \\ \end{bmatrix} \begin{bmatrix} \frac{\partial z_2^{(1)}}{\partial w_{11}^{(1)}} & \frac{\partial z_2^{(1)}}{\partial w_{12}^{(1)}} \\ \frac{\partial z_2^{(1)}}{\partial w_{21}^{(1)}} & \frac{\partial z_2^{(1)}}{\partial w_{22}^{(1)}} \\ \frac{\partial z_2^{(1)}}{\partial w_{31}^{(1)}} & \frac{\partial z_2^{(1)}}{\partial w_{32}^{(1)}} \\ \end{bmatrix} \begin{bmatrix} \frac{\partial z_3^{(1)}}{\partial w_{11}^{(1)}} & \frac{\partial z_3^{(1)}}{\partial w_{12}^{(1)}} \\ \frac{\partial z_3^{(1)}}{\partial w_{21}^{(1)}} & \frac{\partial z_3^{(1)}}{\partial w_{22}^{(1)}} \\ \frac{\partial z_3^{(1)}}{\partial w_{31}^{(1)}} & \frac{\partial z_3^{(1)}}{\partial w_{32}^{(1)}} \\ \end{bmatrix} \right]_{N^{(1)}\times N^{(1)} \times n} \\ &= \delta^{(1)}_{N^{(2)} \times N^{(1)}} \left[ \begin{bmatrix} x_1 & x_2 \\ 0 & 0 \\ 0 & 0 \\ \end{bmatrix} \begin{bmatrix} 0 & 0 \\ x_1 & x_2 \\ 0 & 0 \\ \end{bmatrix} \begin{bmatrix} 0 & 0 \\ 0 & 0 \\ x_1 & x_2 \\ \end{bmatrix} \right]_{N^{(1)}\times N^{(1)} \times n} \end{align*} \]

Hence, the derivative \(\frac{\partial \vec{L}}{\partial \textbf{W}^{(1)}}\) is a tensor of size \({N^{(2)}\times N^{(1)} \times n}\).
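The layer-1 parameter gradients of Sections 3.7 and 3.8 can be checked end to end on the full example network (\(n = 2\), \(N^{(1)} = 3\), \(N^{(2)} = 2\)). As before, this is a sketch assuming NumPy, \(h = \tanh\), a sigmoid \(o\) and random parameters.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

n, N1, N2 = 2, 3, 2
rng = np.random.default_rng(5)
x = rng.normal(size=(n, 1))
W1, b1 = rng.normal(size=(N1, n)), rng.normal(size=(N1, 1))
W2, b2 = rng.normal(size=(N2, N1)), rng.normal(size=(N2, 1))
y = np.array([[1.0], [0.0]])       # hypothetical target

def loss_vec(W, b):
    """Vector loss as a function of W^(1) and b^(1), assuming h = tanh and o = sigmoid."""
    return 0.5 * (sigmoid(W2 @ np.tanh(W @ x + b) + b2) - y) ** 2

a1 = np.tanh(W1 @ x + b1)
y_hat = sigmoid(W2 @ a1 + b2)
delta2 = np.diag((y_hat - y).ravel()) @ np.diag((y_hat * (1 - y_hat)).ravel())
delta1 = delta2 @ W2 @ np.diag((1.0 - a1 ** 2).ravel())   # N2 x N1

dL_db1 = delta1                     # dz^(1)/db^(1) is the identity
dz_dW = np.zeros((N1, N1, n))       # slice k has x^T in row k, zeros elsewhere
for k in range(N1):
    dz_dW[k, k, :] = x.ravel()
dL_dW1 = np.tensordot(delta1, dz_dW, axes=(1, 0))          # N2 x N1 x n

eps = 1e-6
fd_W = np.zeros_like(dL_dW1)
for i in range(N1):
    for j in range(n):
        dW = np.zeros_like(W1)
        dW[i, j] = eps
        fd_W[:, i, j] = ((loss_vec(W1 + dW, b1) - loss_vec(W1 - dW, b1)) / (2 * eps)).ravel()
fd_b = np.zeros_like(dL_db1)
for i in range(N1):
    db = np.zeros_like(b1)
    db[i, 0] = eps
    fd_b[:, i] = ((loss_vec(W1, b1 + db) - loss_vec(W1, b1 - db)) / (2 * eps)).ravel()

print(dL_dW1.shape, np.allclose(dL_dW1, fd_W, atol=1e-8), np.allclose(dL_db1, fd_b, atol=1e-8))
```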

4 Back-propagation equations summary

4.1 Gradient equations with dimensions

The gradient equations derived for the example network are summarized below, together with their general forms.

\[ \DeclareMathOperator{\diag}{diag} \DeclareMathOperator{\reshape}{reshape} \begin{align*} \frac{\partial \vec{L}}{\partial \hat{\textbf{y}}}&= \begin{bmatrix} \left(\hat{y}_1 - y_1\right) & 0 \\ 0 & \left(\hat{y}_2 - y_2\right) \\ \end{bmatrix}_{N^{(2)} \times N^{(2)}} = \diag(\hat{\textbf{y}} - \textbf{y})_{N^{(2)}\times N^{(2)}} \\ \frac{\partial \vec{L}}{\partial \textbf{z}^{(2)}} &= \frac{\partial \vec{L}}{\partial \hat{\textbf{y}}} \begin{bmatrix} o'(z_1^{(2)}) & 0 \\ 0 & o'(z_2^{(2)}) \\ \end{bmatrix}_{N^{(2)} \times N^{(2)}} =\frac{\partial \vec{L}}{\partial \hat{\textbf{y}}} \diag(o'(\textbf{z}^{(2)})) = \delta_{N^{(2)} \times N^{(2)}}^{(2)} \\ \frac{\partial \vec{L}}{\partial \textbf{b}^{(2)}} &= \frac{\partial \vec{L}}{\partial \textbf{z}^{(2)}} \frac{\partial \textbf{z}^{(2)}}{\partial \textbf{b}^{(2)}} = \delta^{(2)}_{N^{(2)}\times N^{(2)}} \\ \frac{\partial \vec{L}}{\partial \textbf{W}^{(2)}} &= \frac{\partial \vec{L}}{\partial \textbf{z}^{(2)}} \frac{\partial \textbf{z}^{(2)}}{\partial \textbf{W}^{(2)}} = \delta^{(2)}_{N^{(2)}\times N^{(2)}} \left[ \begin{bmatrix} a_1^{(1)} & a_2^{(1)} & a_3^{(1)} \\ 0 & 0 & 0 \\ \end{bmatrix} \begin{bmatrix} 0 & 0 & 0 \\ a_1^{(1)} & a_2^{(1)} & a_3^{(1)} \\ \end{bmatrix} \right]_{N^{(2)}\times N^{(2)} \times N^{(1)}} \\ &= \delta_{N^{(2)}\times N^{(2)}}^{(2)} \reshape(I_{N^{(2)}\times N^{(2)}} \otimes (\textbf{a}^{(1)})^T, (N^{(2)}\times N^{(2)}\times N^{(1)})) \end{align*} \]

Here, \(\otimes\) denotes the Kronecker product between the identity matrix \(I_{N^{(2)}\times N^{(2)}}\) and the row vector \((\textbf{a}^{(1)})^T\), which yields a matrix of size \(N^{(2)}\times (N^{(2)} \cdot N^{(1)})\). The \(\reshape\) operator then rearranges this matrix into a tensor of shape \(N^{(2)}\times N^{(2)}\times N^{(1)}\), reading and writing elements in row-major order.
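Because the result of \(\reshape\) depends on memory order, it is worth checking which Kronecker factor order reproduces the explicit tensor of Section 3.4. In the NumPy sketch below (the values of \(\textbf{a}^{(1)}\) are arbitrary), `np.kron` builds the \(N^{(2)}\times (N^{(2)} \cdot N^{(1)})\) matrix and `reshape` defaults to row-major (`order="C"`). With a row-major reshape, the identity must be the left factor, \(I \otimes (\textbf{a}^{(1)})^T\); the order \((\textbf{a}^{(1)})^T \otimes I\) gives the correct tensor only with a column-major (`order="F"`) reshape.

```python
import numpy as np

N1, N2 = 3, 2
a1 = np.array([[1.0], [2.0], [3.0]])   # arbitrary a^(1), N1 x 1

# Explicit tensor dz^(2)/dW^(2): slice k has (a^(1))^T in row k
explicit = np.zeros((N2, N2, N1))
for k in range(N2):
    explicit[k, k, :] = a1.ravel()

# I kron a^T is N2 x (N2*N1); reshape defaults to row-major (order="C")
kron_I_first = np.kron(np.eye(N2), a1.T).reshape(N2, N2, N1)
# Swapping the factors and reshaping row-major scrambles the entries...
kron_a_first = np.kron(a1.T, np.eye(N2)).reshape(N2, N2, N1)
# ...while a column-major reshape of the swapped product recovers the tensor
kron_a_first_F = np.kron(a1.T, np.eye(N2)).reshape((N2, N2, N1), order="F")

print(np.array_equal(kron_I_first, explicit))     # True
print(np.array_equal(kron_a_first, explicit))     # False
print(np.array_equal(kron_a_first_F, explicit))   # True
```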

4.2 Gradient equations with dimensions - continued

\[ \begin{align*} \frac{\partial \vec{L}}{\partial \textbf{a}^{(1)}} &= \frac{\partial \vec{L}}{\partial \textbf{z}^{(2)}} \frac{\partial \textbf{z}^{(2)}}{\partial \textbf{a}^{(1)}} = \delta^{(2)}_{N^{(2)}\times N^{(2)}} \textbf{W}^{(2)}_{N^{(2)}\times N^{(1)}} \\ \frac{\partial \vec{L}}{\partial \textbf{z}^{(1)}} &= \frac{\partial \vec{L}}{\partial \textbf{a}^{(1)}} \frac{\partial \textbf{a}^{(1)}}{\partial \textbf{z}^{(1)}} = \frac{\partial \vec{L}}{\partial \textbf{a}^{(1)}}_{N^{(2)} \times N^{(1)}} \diag(h'(\textbf{z}^{(1)}))_{N^{(1)} \times N^{(1)}} = \delta^{(1)}_{N^{(2)}\times N^{(1)}} \\ \frac{\partial \vec{L}}{\partial \textbf{b}^{(1)}} &= \frac{\partial \vec{L}}{\partial \textbf{z}^{(1)}} \frac{\partial \textbf{z}^{(1)}}{\partial \textbf{b}^{(1)}} = \delta^{(1)}_{N^{(2)} \times N^{(1)}}\\ \frac{\partial \vec{L}}{\partial \textbf{W}^{(1)}} &= \frac{\partial \vec{L}}{\partial \textbf{z}^{(1)}} \frac{\partial \textbf{z}^{(1)}}{\partial \textbf{W}^{(1)}} = \delta^{(1)}_{N^{(2)} \times N^{(1)}} \left[ \begin{bmatrix} x_1 & x_2 \\ 0 & 0 \\ 0 & 0 \\ \end{bmatrix} \begin{bmatrix} 0 & 0 \\ x_1 & x_2 \\ 0 & 0 \\ \end{bmatrix} \begin{bmatrix} 0 & 0 \\ 0 & 0 \\ x_1 & x_2 \\ \end{bmatrix} \right]_{N^{(1)}\times N^{(1)} \times n} \\ &= \delta_{N^{(2)}\times N^{(1)}}^{(1)} \reshape(I_{N^{(1)}\times N^{(1)}} \otimes \textbf{x}^T, (N^{(1)}\times N^{(1)}\times n)) \end{align*} \]

Here also, \(\otimes\) denotes the Kronecker product, and \(\reshape\) forms the tensor from the resulting matrix in row-major order. With these gradients computed, the weights and biases of both layers can be updated.
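Putting the summary equations together gives a backward pass that works for arbitrary layer sizes. The sketch below is one possible NumPy implementation under the same assumptions as before (\(h = \tanh\), sigmoid \(o\)); the function name `backward` is hypothetical. It returns the per-component gradients with the leading \(N^{(2)}\) axis, in the shapes listed above.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def backward(x, y, W1, b1, W2, b2):
    """Per-component gradients from the summary equations (h = tanh, o = sigmoid assumed)."""
    N1, n = W1.shape
    N2 = W2.shape[0]
    a1 = np.tanh(W1 @ x + b1)
    y_hat = sigmoid(W2 @ a1 + b2)

    # Output layer
    delta2 = np.diag((y_hat - y).ravel()) @ np.diag((y_hat * (1 - y_hat)).ravel())
    dL_db2 = delta2                                           # N2 x N2
    dz2_dW2 = np.kron(np.eye(N2), a1.T).reshape(N2, N2, N1)
    dL_dW2 = np.tensordot(delta2, dz2_dW2, axes=(1, 0))       # N2 x N2 x N1

    # Hidden layer
    dL_da1 = delta2 @ W2                                      # N2 x N1
    delta1 = dL_da1 @ np.diag((1.0 - a1 ** 2).ravel())        # N2 x N1
    dL_db1 = delta1
    dz1_dW1 = np.kron(np.eye(N1), x.T).reshape(N1, N1, n)
    dL_dW1 = np.tensordot(delta1, dz1_dW1, axes=(1, 0))       # N2 x N1 x n
    return dL_dW1, dL_db1, dL_dW2, dL_db2

n, N1, N2 = 4, 5, 3      # arbitrary sizes, to exercise the general form
rng = np.random.default_rng(6)
x, y = rng.normal(size=(n, 1)), rng.uniform(size=(N2, 1))
W1, b1 = rng.normal(size=(N1, n)), rng.normal(size=(N1, 1))
W2, b2 = rng.normal(size=(N2, N1)), rng.normal(size=(N2, 1))

dL_dW1, dL_db1, dL_dW2, dL_db2 = backward(x, y, W1, b1, W2, b2)
print(dL_dW1.shape, dL_db1.shape, dL_dW2.shape, dL_db2.shape)
# (3, 5, 4) (3, 5) (3, 3, 5) (3, 3)
```

Summing each returned gradient over its first axis gives the gradient of the scalar loss \(L\), which is what the update rule of Section 4.3 uses.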

4.3 Updating weights and biases

Note that each gradient has one more dimension than its corresponding variable. For example, \(\frac{\partial \vec{L}}{\partial \textbf{W}^{(1)}}\) is a 3-dimensional tensor, while \(\textbf{W}^{(1)}\) is a 2-dimensional matrix. The extra dimension comes from the \(N^{(2)}\) components of the loss vector \(\vec{L}\).

Each slice along the outermost dimension (for example, each of the \(N^{(2)}\) slices of \(\frac{\partial \vec{L}}{\partial \textbf{W}^{(1)}}\), which has size \(N^{(2)} \times N^{(1)} \times n\)) is the gradient of the loss component \(L_k\) associated with the output \(\hat{y}_k\).

Since the scalar loss is \(L = \sum_{k} L_k\), its gradient is obtained by summing these slices over the outermost (output) dimension. The weights and biases are therefore updated as given below.


\[ \begin{align*} w^{(1)}_{i,j} &:= w^{(1)}_{i,j} - \alpha \sum_{k=1}^{N^{(2)}} \frac{\partial L_{k}}{\partial w^{(1)}_{i,j}} , \ i=1,2,\dots,N^{(1)}, \ j=1,2,\dots,n \\ b^{(1)}_{i} &:= b^{(1)}_{i} - \alpha \sum_{k=1}^{N^{(2)}} \frac{\partial L_{k}}{\partial b^{(1)}_{i}} , \ i=1,2,\dots,N^{(1)} \\ w^{(2)}_{i,j} &:= w^{(2)}_{i,j} - \alpha \sum_{k=1}^{N^{(2)}} \frac{\partial L_{k}}{\partial w^{(2)}_{i,j}} , \ i=1,2,\dots,N^{(2)}, \ j=1,2,\dots,N^{(1)} \\ b^{(2)}_{i} &:= b^{(2)}_{i} - \alpha \sum_{k=1}^{N^{(2)}} \frac{\partial L_{k}}{\partial b^{(2)}_{i}} , \ i=1,2,\dots,N^{(2)} \\ \end{align*} \]

where \(\alpha\) is the learning rate.
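A gradient-descent step then sums each per-component gradient over its outermost axis before applying the update rule. This sketch assumes NumPy, \(h = \tanh\), a sigmoid \(o\), a single training sample and an illustrative learning rate \(\alpha = 0.1\); the helper names `scalar_loss`, `per_component_grads` and `sgd_step` are hypothetical. For a small enough \(\alpha\), repeating the step should drive the scalar loss down.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def scalar_loss(params, x, y):
    """Scalar loss L = sum_k L_k, assuming h = tanh and o = sigmoid."""
    W1, b1, W2, b2 = params
    y_hat = sigmoid(W2 @ np.tanh(W1 @ x + b1) + b2)
    return float(np.sum(0.5 * (y_hat - y) ** 2))

def per_component_grads(params, x, y):
    """Gradients with the leading N2 (loss-component) axis, as in Section 4."""
    W1, b1, W2, b2 = params
    N1, n = W1.shape
    N2 = W2.shape[0]
    a1 = np.tanh(W1 @ x + b1)
    y_hat = sigmoid(W2 @ a1 + b2)
    delta2 = np.diag(((y_hat - y) * y_hat * (1 - y_hat)).ravel())   # diag(y_hat - y) diag(o')
    delta1 = delta2 @ W2 @ np.diag((1 - a1 ** 2).ravel())           # N2 x N1
    dW2 = np.tensordot(delta2, np.kron(np.eye(N2), a1.T).reshape(N2, N2, N1), axes=(1, 0))
    dW1 = np.tensordot(delta1, np.kron(np.eye(N1), x.T).reshape(N1, N1, n), axes=(1, 0))
    return dW1, delta1, dW2, delta2    # dL/dW1, dL/db1, dL/dW2, dL/db2

def sgd_step(params, x, y, alpha):
    """One update: sum each gradient over its outermost axis, then step downhill."""
    new_params = []
    for p, g in zip(params, per_component_grads(params, x, y)):
        g_sum = g.sum(axis=0)          # gradient of the scalar loss L
        new_params.append(p - alpha * g_sum.reshape(p.shape))
    return new_params

rng = np.random.default_rng(7)
x, y = rng.normal(size=(2, 1)), np.array([[1.0], [0.0]])
params = [rng.normal(size=(3, 2)), rng.normal(size=(3, 1)),
          rng.normal(size=(2, 3)), rng.normal(size=(2, 1))]

losses = [scalar_loss(params, x, y)]
for _ in range(200):
    params = sgd_step(params, x, y, alpha=0.1)
    losses.append(scalar_loss(params, x, y))
print(losses[0], losses[-1])
```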

5 Conclusion

In this work:

- The flow of back-propagation through a fully connected neural network was worked out and explained mathematically.
- The loss gradient equations were derived in a general form that can be extended to other types of networks, such as RNNs.
- The derivation provides a mathematical foundation for feed-forward networks (FNNs), which will later be extended to understand and derive the RNN equations.
